In today’s digital era, computing power plays a crucial part in shaping our technological advances and transforming many aspects of our lives. From smartphones and personal computers to supercomputers and cloud computing, understanding the concept of computing power is fundamental. This guide examines the principles of computing power and offers insights into how it works, so that we can harness its potential for innovation and progress.

Understanding Computing Power:

1.1. Definition: Define computing power as the capability of a computer system to perform complex calculations, process vast amounts of data, and execute tasks at high speed.

1.2. Components: Explore the key components that contribute to computing power, such as the processor (CPU), memory (RAM), storage (hard drives, SSDs), and graphics processing units (GPUs).

1.3. Measuring Computing Power: Discuss common metrics used to measure computing power, including clock speed, core count, cache size, and benchmark results expressed in FLOPS (floating-point operations per second).
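As a rough illustration of how these metrics combine, a processor's theoretical peak FLOPS is often estimated as cores × clock speed × floating-point operations completed per cycle per core. The sketch below assumes a hypothetical 8-core, 3.0 GHz CPU that completes 16 floating-point operations per cycle per core; the function name and all figures are illustrative assumptions, and the sustained performance that benchmarks measure is typically well below this peak.

```python
def peak_flops(cores: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak floating-point operations per second (an upper bound)."""
    return cores * clock_hz * flops_per_cycle


# Hypothetical CPU: 8 cores at 3.0 GHz, 16 FLOPs per cycle per core (assumed values).
peak = peak_flops(cores=8, clock_hz=3.0e9, flops_per_cycle=16)
print(f"Theoretical peak: {peak / 1e9:.0f} GFLOPS")
```

With these assumed inputs the estimate works out to 8 × 3.0 × 10^9 × 16 = 384 billion operations per second, or 384 GFLOPS.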

Moore’s Law and its Implications:

2.1. Introduction to Moore’s Law: Explain Moore’s Law, the observation that the number of transistors on a microchip doubles roughly every two years, which has historically led to exponential growth in computing power.

2.2. Implications of Moore’s Law: Discuss the impact of Moore’s Law on the technology industry, including increased performance, miniaturization of devices, reduced costs, and advances in areas such as artificial intelligence, big data analytics, and scientific simulation.

Computing Power in Practice:

3.1. Personal Computing: Investigate how computing power has revolutionized personal computing, leading to faster and more capable devices that can handle demanding tasks such as gaming, multimedia editing, and virtual reality.

3.2. Scientific and Research Applications: Highlight the role of high-performance computing (HPC) and supercomputers in advancing scientific research, weather forecasting, drug discovery, and simulations in fields such as astrophysics, genomics, and climate modeling.

3.3. Cloud Computing: Discuss the emergence of cloud computing, where computing power is accessed remotely over the internet, providing scalable, on-demand resources for businesses, data storage, and software applications.

3.4. Artificial Intelligence and Machine Learning: Explain how computing power drives the development of artificial intelligence (AI) and machine learning (ML) algorithms, enabling complex pattern recognition, natural language processing, and predictive analytics.

Advancements and Future Trends:

4.1. Quantum Computing: Introduce the concept of quantum computing, a revolutionary approach that harnesses quantum mechanics to solve certain classes of problems dramatically faster (in some cases exponentially faster) than classical computers, opening up new possibilities in cryptography, optimization, and other complex problems.

4.2. Edge Computing: Examine the rise of edge computing, where computing power is decentralized and brought closer to the data source, reducing latency and enabling real-time processing for applications such as the Internet of Things (IoT), autonomous vehicles, and smart cities.

4.3. Sustainable Computing: Address the importance of energy-efficient computing and investigate advances in reducing power consumption, optimizing algorithms, and using renewable energy sources to make computing more sustainable.
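Energy efficiency is commonly compared as performance per watt, while the energy a job consumes is power multiplied by runtime. The sketch below compares two hypothetical systems using assumed throughput and power figures (the class name, systems, and numbers are all illustrative); it shows that a system drawing more power can still use less energy per job if it finishes sooner.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    gflops: float  # sustained throughput in GFLOPS (assumed)
    watts: float   # average power draw while running (assumed)

    def gflops_per_watt(self) -> float:
        """Performance per watt: a common energy-efficiency metric."""
        return self.gflops / self.watts

    def energy_joules(self, total_gflop: float) -> float:
        """Energy for a job of `total_gflop` billion operations: power x runtime."""
        runtime_s = total_gflop / self.gflops
        return self.watts * runtime_s


a = System("Hypothetical A", gflops=100, watts=50)
b = System("Hypothetical B", gflops=400, watts=120)
job = 36_000  # hypothetical job size in GFLOP

for s in (a, b):
    print(f"{s.name}: {s.gflops_per_watt():.2f} GFLOPS/W, {s.energy_joules(job):,.0f} J per job")
```

Under these assumptions, system B draws more than twice the power of A but finishes the job in 90 s instead of 360 s, so it consumes 10,800 J rather than 18,000 J. Energy per job is simply work divided by efficiency, which is why performance per watt is the figure to compare.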

Understanding the principles of computing power is key to unlocking its potential to drive technological progress and innovation. From personal computing to scientific research and emerging trends such as quantum computing and edge computing, computing power continues to shape our world. By grasping its principles and staying informed about new advances, we can harness this potential responsibly and move toward a more connected, intelligent, and sustainable world.

Computing power refers to the capability of a computer system to perform calculations, process data, and execute tasks efficiently. Here’s how computing power works:

Processor (CPU):

The central processing unit (CPU) is the brain of a computer. It executes the instructions and calculations that every task depends on. A modern CPU contains multiple cores, each capable of executing instructions independently. Higher core counts allow parallel processing and faster execution of tasks that can be split across cores.
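Adding cores only speeds up the part of a task that can actually run in parallel. Amdahl's law, a standard model not named in the original text, captures this: if a fraction p of the work is parallelizable and runs on n cores, the best-case speedup is 1 / ((1 - p) + p / n). The sketch below assumes a hypothetical workload that is 90% parallelizable; the function name is illustrative.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup predicted by Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time no matter how many cores are added.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workload: 90% of the work can be parallelized.
for cores in (1, 2, 4, 8, 64):
    print(f"{cores:>2} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

Even with 64 cores the speedup stays below 10x, because the serial 10% never shrinks; the limit as n grows is 1 / (1 - p).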

Clock Speed:

Clock speed, measured in gigahertz (GHz), is the number of cycles a CPU completes per second; 1 GHz is one billion cycles per second. Higher clock speeds generally mean faster processing, allowing tasks to be completed more quickly. However, clock speed isn’t the sole factor determining computing power: how many instructions the CPU completes per cycle, its core count, and its cache also matter.
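One way to see why clock speed alone is not decisive is to combine it with instructions per cycle (IPC): per-core instruction throughput is roughly clock speed × IPC. The two cores below are hypothetical, and their clock and IPC values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Core:
    name: str
    clock_ghz: float  # billions of cycles per second
    ipc: float        # average instructions completed per cycle (assumed)

    def instructions_per_second(self) -> float:
        return self.clock_ghz * 1e9 * self.ipc


def runtime_seconds(core: Core, instructions: float) -> float:
    """Time for one core to execute a fixed number of instructions."""
    return instructions / core.instructions_per_second()


fast_clock = Core("Hypothetical A", clock_ghz=4.0, ipc=2.0)
high_ipc = Core("Hypothetical B", clock_ghz=3.0, ipc=3.0)

workload = 60e9  # hypothetical task of 60 billion instructions
for core in (fast_clock, high_ipc):
    print(f"{core.name}: {runtime_seconds(core, workload):.1f} s")
```

Here the lower-clocked design B finishes first (about 6.7 s versus 7.5 s) because it completes more work per cycle.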

Cache:

CPU cache is high-speed memory located close to the processor. It stores frequently accessed instructions and data, reducing the time required to fetch them from main memory. Larger caches can hold more of this data close at hand, improving overall performance.
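To illustrate why keeping frequently accessed data close to the processor helps, the sketch below simulates a tiny least-recently-used (LRU) cache and counts hits and misses for a sequence of memory addresses. Real CPU caches are set-associative hardware with their own replacement policies, so the function name, capacities, LRU policy, and access pattern here are simplifying assumptions.

```python
from collections import OrderedDict


def simulate_lru(accesses, capacity):
    """Return (hits, misses) for an LRU cache holding `capacity` entries."""
    cache = OrderedDict()
    hits = misses = 0
    for addr in accesses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            misses += 1
            cache[addr] = True             # "fetch" from main memory
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits, misses


# A loop that repeatedly touches the same 4 addresses (strong temporal locality).
pattern = [0, 1, 2, 3] * 25
for capacity in (2, 4):
    hits, misses = simulate_lru(pattern, capacity)
    print(f"capacity={capacity}: {hits} hits, {misses} misses")
```

With room for all four addresses, only the first four accesses miss (a 96% hit rate); with room for two, this cyclic pattern evicts each address just before it is needed again, so every access misses. In miniature, this is why a larger cache helps when a program's working set fits in it.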

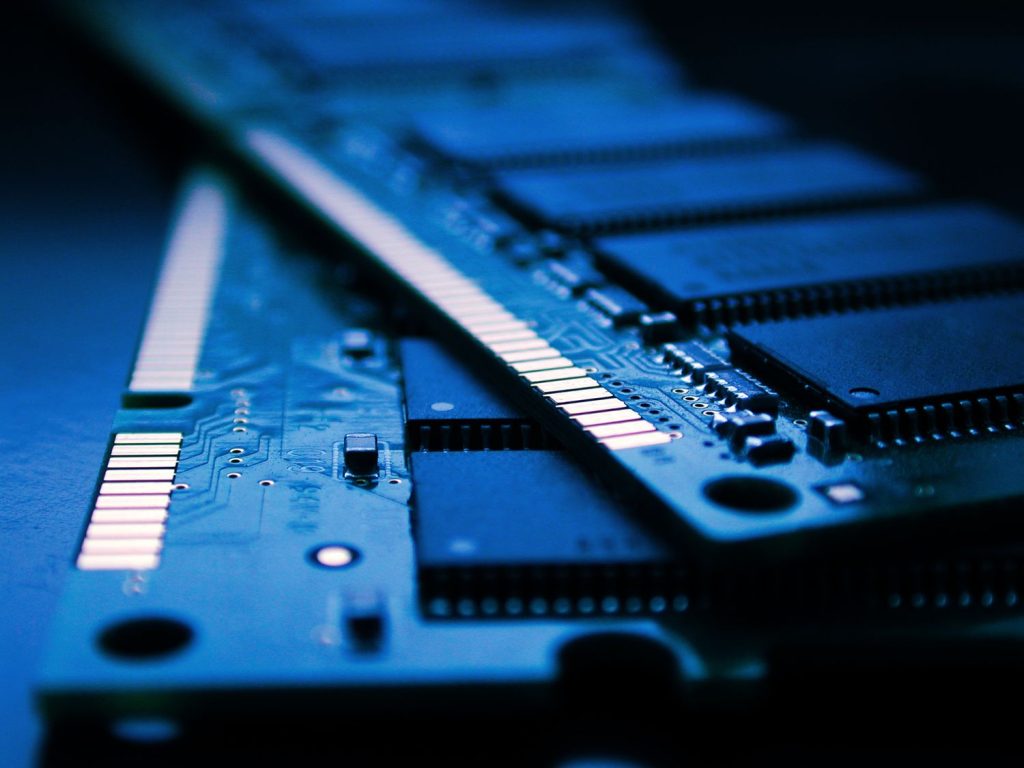

Memory (RAM):

Random-access memory (RAM) stores data that the CPU needs to access quickly. It provides temporary storage for running applications and the data they are actively using. Larger RAM capacities allow more data to be held in memory, reducing the need for frequent transfers to and from storage and improving multitasking.

Storage:

Storage devices, such as hard disk drives (HDDs) and solid-state drives (SSDs), store data persistently. HDDs offer larger capacities at a lower cost per gigabyte, whereas SSDs provide faster read/write speeds and better system responsiveness.
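A quick calculation shows what faster read speeds mean in practice: sequential read time is file size divided by throughput. The throughputs below (150 MB/s for an HDD, 3,000 MB/s for an NVMe SSD) are assumed, illustrative values rather than specifications of any particular drive, and the function name is hypothetical.

```python
def read_time_seconds(size_gb: float, throughput_mb_s: float) -> float:
    """Time to read `size_gb` gigabytes sequentially (using 1 GB = 1000 MB)."""
    return size_gb * 1000 / throughput_mb_s


# Assumed sequential throughputs, for illustration only.
devices = [("HDD (assumed 150 MB/s)", 150), ("NVMe SSD (assumed 3000 MB/s)", 3000)]
for device, mb_s in devices:
    print(f"{device}: {read_time_seconds(10, mb_s):.1f} s to read a 10 GB file")
```

Under these assumptions, the 10 GB file takes about 67 s on the HDD and about 3.3 s on the SSD. For scattered, random reads the gap is usually wider still, because an HDD must physically move its read head for each seek.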

Graphics Processing Unit (GPU):

The GPU specializes in rendering and manipulating images, video, and complex graphics. GPUs excel at parallel processing, which makes them valuable for tasks such as gaming, multimedia editing, and scientific simulations.

Distributed Computing:

Distributed computing uses multiple computers or processors working together on a single task. This approach speeds up processing and makes large-scale problems tractable by dividing them into smaller parts that can be processed simultaneously.
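The divide-and-combine idea can be sketched on a single machine with Python's standard `concurrent.futures` module: the data is split into chunks, each chunk is processed by a separate worker, and the partial results are combined at the end. A real distributed system would spread the chunks across networked machines; the function names and the sum-of-squares workload here are hypothetical examples.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def split_into_chunks(data, parts):
    """Split `data` into `parts` contiguous slices whose sizes differ by at most one."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(numbers):
    """The independent piece of work each worker performs on its chunk."""
    return sum(n * n for n in numbers)


def parallel_sum_of_squares(data, workers=4, executor_cls=ProcessPoolExecutor):
    """Divide the task, run the chunks concurrently, then combine the partial results."""
    with executor_cls(max_workers=workers) as pool:
        partial_results = pool.map(sum_of_squares, split_into_chunks(data, workers))
        return sum(partial_results)


if __name__ == "__main__":
    data = list(range(100_000))
    expected = sum_of_squares(data)  # sequential reference result
    # Separate processes sidestep CPython's global interpreter lock (GIL),
    # so CPU-bound chunks can run truly in parallel on multiple cores.
    assert parallel_sum_of_squares(data) == expected
    # Threads are lighter-weight, but in standard CPython builds the GIL largely
    # serializes CPU-bound Python code, so threads suit I/O-bound work better.
    assert parallel_sum_of_squares(data, executor_cls=ThreadPoolExecutor) == expected
    print(expected)
```

The key design point is that each chunk's work is independent, so no worker has to wait for another; only the final combining step is sequential.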

Advancements in Technology:

Advances in processor design, fabrication processes, and semiconductor materials contribute to improved computing power. Technological progress has led to smaller transistors, higher transistor density, and better energy efficiency, enabling faster and more efficient computing.

Software Optimization:

Efficient programming and optimization techniques play a vital part in maximizing computing power. Developers use efficient algorithms, parallel processing, and task scheduling to make the most effective use of available computing resources.
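Choosing a better algorithm is often the largest software optimization available. The illustrative sketch below (the function names are hypothetical) counts the steps a linear scan and a binary search need to find the same value in a sorted list of one million integers: the scan's cost grows linearly with the list size, while binary search halves the remaining range on every iteration.

```python
def linear_search(items, target):
    """Return (index, comparisons) for a front-to-back scan; index is -1 if absent."""
    comparisons = 0
    for index, item in enumerate(items):
        comparisons += 1
        if item == target:
            return index, comparisons
    return -1, comparisons


def binary_search(sorted_items, target):
    """Return (leftmost insertion index, iterations); the range halves each iteration."""
    lo, hi, iterations = 0, len(sorted_items), 0
    while lo < hi:
        iterations += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return lo, iterations


items = list(range(1_000_000))
target = 999_999  # worst case for the linear scan: the last element
print("linear:", linear_search(items, target))
print("binary:", binary_search(items, target))
```

On this input the scan makes 1,000,000 comparisons, while the binary search needs at most 20 iterations, since 2^20 > 1,000,000. The trade-off is that binary search requires the data to be sorted first.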

Moore’s Law:

Moore’s Law, an observation by Gordon Moore, holds that the number of transistors on a microchip doubles roughly every two years. This exponential growth has historically led to significant increases in computing power, enabling advances in many fields.
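The doubling rule can be written as N(t) = N0 × 2^(t/T), where N0 is the starting transistor count, t is elapsed time in years, and T is the doubling period (about two years). The minimal sketch below assumes a hypothetical starting chip with one billion transistors; the function name is illustrative, and this is an idealized projection, since the real trend has been less regular and has slowed in recent years.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: one doubling every `doubling_period_years`."""
    return initial_count * 2 ** (years / doubling_period_years)


# Hypothetical chip with 1 billion transistors at year 0.
for years in (2, 10, 20):
    print(f"after {years:>2} years: {projected_transistors(1e9, years):.3g} transistors")
```

Twenty years is ten doublings, so the projected count grows by a factor of 2^10 = 1,024.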

By leveraging the components described above, optimizing software, and benefiting from continuous technological advances, we keep pushing computing power forward, and it continues to shape the digital landscape. The pursuit of more powerful and efficient computing systems remains a driving force in enabling innovation, solving complex problems, and improving our digital experiences.